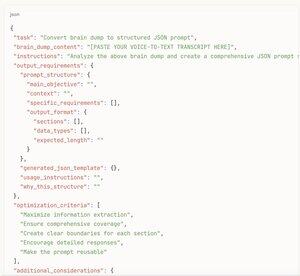

prompting in JSON or XML format can make LLM output up to 10x longer and more detailed

why?

because structured formats give the model clear boundaries and expectations

when you ask for unstructured text, the LLM has to guess:

how long should this be?

what sections do I need?

when am I done?

what level of detail?

but with JSON/XML you're literally providing a template:

```json
{
  "summary": "",
  "key_points": [],
  "analysis": "",
  "recommendations": []
}
```

now the model knows exactly what to fill in and roughly how much content each section needs
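a minimal Python sketch of using that template in practice: it embeds the empty template in a prompt, then checks which fields a reply left blank. the helper names `build_prompt` and `missing_fields`, the sample task, and the sample reply are all made up for illustration, and the sketch assumes the model replies with a single JSON object

```python
import json

# The template from the post; the field names are the post's own.
TEMPLATE = {
    "summary": "",
    "key_points": [],
    "analysis": "",
    "recommendations": [],
}


def build_prompt(task: str, template: dict) -> str:
    """Embed the empty template in the prompt so the model sees every field."""
    return (
        f"{task}\n\n"
        "Reply with JSON only, filling in every field of this template:\n"
        f"{json.dumps(template, indent=2)}"
    )


def missing_fields(reply_text: str, template: dict) -> list[str]:
    """Return template fields that are absent or left empty in the reply.

    Assumes reply_text parses to a JSON object (a dict).
    """
    reply = json.loads(reply_text)
    return [key for key in template if not reply.get(key)]


# Hypothetical task and reply, only to show the check in action.
prompt = build_prompt("Analyze the used EV market.", TEMPLATE)
sample_reply = (
    '{"summary": "Prices fell.", "key_points": ["supply up"], '
    '"analysis": "", "recommendations": []}'
)
print(missing_fields(sample_reply, TEMPLATE))  # ['analysis', 'recommendations']
```

checking for empty fields is one cheap way to tell whether the model actually filled in the whole form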

it's like the difference between "write something about cars" vs "fill out this car review form with these 12 specific fields"

the structure removes decision paralysis and gives the model permission to be comprehensive

plus JSON/XML naturally encourages the model to think in organized chunks rather than just streaming text until it feels "done"

[here's the meta hack]

don't even try to write structured prompts from scratch

instead:

1. voice-to-text brain dump everything you want analyzed/researched/written about

2. paste that messy transcript into the AI

3. ask it to "create a JSON prompt structure based on this brain dump that would get the best possible output"

4. take that generated JSON template

5. send it back as your actual prompt
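the five steps above can be sketched as two model calls. this is only an illustration: `run_meta_hack` is a hypothetical helper, `ask_model` stands for whatever function you use to call your LLM, and `fake_model` is a stub so the sketch runs offline

```python
from typing import Callable

# The instruction quoted in step 3 of the post.
META_INSTRUCTION = (
    "create a JSON prompt structure based on this brain dump "
    "that would get the best possible output"
)


def run_meta_hack(brain_dump: str, ask_model: Callable[[str], str]) -> str:
    """Two calls: generate the JSON prompt, then send it back as the prompt."""
    # Steps 2-3: paste the messy transcript and ask for a JSON prompt structure.
    generated_prompt = ask_model(f"{META_INSTRUCTION}\n\n{brain_dump}")
    # Steps 4-5: take the generated template and send it back as the actual prompt.
    return ask_model(generated_prompt)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call (an assumption, not a real API)."""
    if prompt.startswith(META_INSTRUCTION):
        return '{"task": "market analysis", "summary": "", "key_points": []}'
    return f"(model answer to: {prompt})"


# Step 1 would be your voice-to-text transcript; this one is invented.
result = run_meta_hack("uh so i want to look at ev prices, maybe resale...", fake_model)
print(result)
```

swap `fake_model` for your own client function; the only requirement in this sketch is that it takes a prompt string and returns the reply as a string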

you get all the benefits of structured prompting without having to think through the structure yourself

the AI usually has a better sense than you do of which fields will be most useful anyway

turns your stream of consciousness into a professional-grade prompt in 30 seconds

try it yourself - ask for the same analysis in paragraph form vs structured format

the structured version will typically be 3-5x longer and far more detailed
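if you want to measure that comparison instead of eyeballing it, here is a rough sketch. `count_words` and `length_ratio` are hypothetical helpers, the two replies are invented, and it assumes the structured reply is valid JSON. only the values are counted, so field names and brackets don't inflate the structured side

```python
import json


def count_words(value) -> int:
    """Count words in a string, or recursively in JSON-style lists and dicts."""
    if isinstance(value, str):
        return len(value.split())
    if isinstance(value, dict):
        return sum(count_words(v) for v in value.values())
    if isinstance(value, list):
        return sum(count_words(v) for v in value)
    return 0  # numbers, booleans, and null carry no words


def length_ratio(paragraph_reply: str, structured_reply: str) -> float:
    """Words in the structured reply's values divided by words in the paragraph reply."""
    structured_words = count_words(json.loads(structured_reply))
    return structured_words / max(count_words(paragraph_reply), 1)


# Invented replies, only to exercise the function.
paragraph = "EV prices fell this year."
structured = json.dumps({
    "summary": "EV prices fell this year.",
    "key_points": ["supply rose", "demand cooled"],
    "analysis": "Discounts spread.",
})
print(length_ratio(paragraph, structured))  # 2.2
```

word count is a crude proxy for "more detailed", so read a few replies too before trusting the ratio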
